install Cython.

$ sudo pip install Cython

when installing darkflow at the beginning, install it through the method that enables code changes to be applied.

pip install -e .

the original source code only contains parser for VOC as an example. However, by taking it as a reference, creating a custom json parser that will interface properly with the existing system is easy.

if you do not pass on the json parsed attributes to the system properly, it may give an wrong result like the following:

Dataset of 4 instance(s)

Training statistics:

Learning rate : 1e-05

Batch size : 4

Epoch number : 2

Backup every : 2000

step 1 - loss nan - moving ave loss nan

Finish 1 epoch(es)

step 2 - loss nan - moving ave loss nan

Finish 2 epoch(es)

You can see that the system is not able to calculate the loss properly thus, giving nan instead.

A few mistakes that a user can make is 1) did not properly stringify the string of json values, 2) mixed order in x,y values

['007081.jpg', [500, 375, [['dog', 152, 84, 281, 315], ['person', 32, 84, 223, 351], ['person', 259, 105, 500, 375]]]]

this is a proper sample of dumps that is passed on to the system.

If the user doesn’t str() the json string values, it would give something like this:

[u'testimage1.png', [1920, 1080, [[u'car', 898, 544, 591, 390]]]]

Another case is when the user mistakes the order of x,y values. The order should be (xmin, ymin, xmax, ymax). You can see that the wrong example given above has got the min/max the other way around.

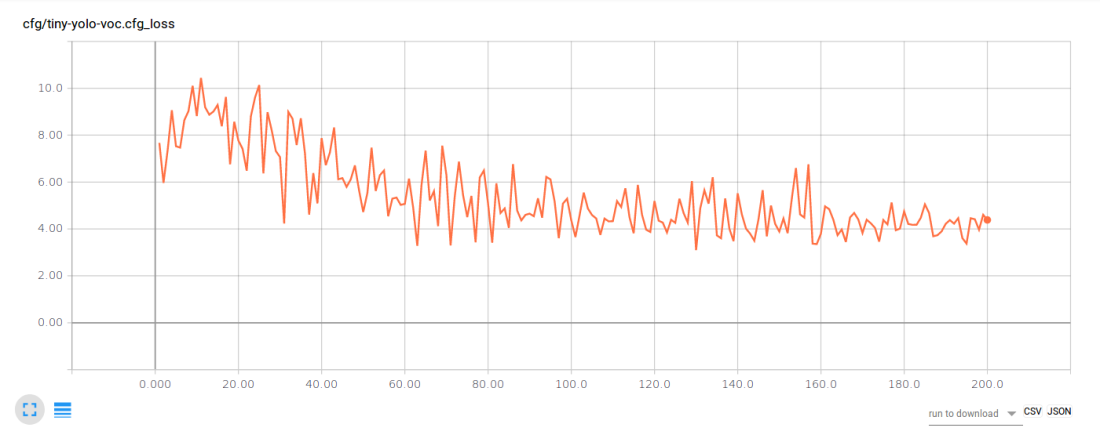

After fixing these minor mistakes, the loss calculation works fine.